Choosing to be a Monolith, Cloud-Native or Cloud-Agnostic

Expectations of modern applications keep growing. If you’re thinking of creating a new application, you will have to make lots of decisions that have nothing to do with the problem you’re trying to solve. Most of them are about common infrastructure needs that almost every application has to satisfy. These needs are universal, whether you’re building in .NET, Java, Node.js, Python, or Go, and whether you’re building a monolith hosted on a single on-premise server or a cloud-native, distributed, global-scale microservices solution. The needs are similar; the differences are in how challenging they are and how you solve them.

Although I’ll use .NET and Microsoft Azure examples to illustrate my points, this article applies to any technology or vendor.

First, let’s look at what these infrastructure needs are (sorry for the long list; feel free to skip ahead once you get the point):

- A way to log and be able to troubleshoot issues

- A way to monitor metrics for performance and failures

- A way to authenticate users and validate their tokens

- A way to authorize user requests

- A way to access storage or database resources

- An exception handling policy

- A way to handle transactions and roll back operations that fail midway

- A way to cache data in memory

- A way to send and receive messages through queues or a message bus (or, as you may call them, domain events)

- A way to communicate with other APIs resiliently (surviving intermittent connection failures)

- A way to do background jobs or long-running processes

- A way to store an audit log of who did what, and when

- A way to report health status

- A secure way to access configurations

- A way to route requests to the right handler/processor/controller/service

- A developer-friendly page for documentation and getting started

- A way to scale and load balance traffic to multiple instances of your application

- A failover plan to recover from a data centre disaster

When it comes to satisfying these needs, in my opinion there are three approaches we can take:

Embedded inside your application

Find a library or SDK, usually language-specific, that allows you to embed the above functionality inside your application.

If you are in the .NET world these are examples of things you could use with this approach:

- Serilog or NLog to log to files or a database

- Middleware to validate authorization tokens

- ASP.NET Identity for authentication/authorization

- Middleware to handle exceptions

- Entity Framework for SQL Server or Mongo SDK for MongoDB or other NoSQL alternatives

- In-memory cache provider

- Hangfire or Quartz.NET for background jobs

- Polly for resiliency (retries and circuit breaker)

- Swashbuckle for OpenAPI (formerly Swagger) documentation

- An appsettings.json file for configuration

This approach works well when you host your app locally on your dev machine, on an on-premise server, or on a VM in the cloud. It suits a monolithic application, where you have one language and one technology. It makes your app thicker, but lets you host it anywhere without many dependencies.
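To make the embedded approach concrete, here is roughly the kind of in-process resiliency a library like Polly provides, sketched in Python with only the standard library. This is not Polly’s API: it is a minimal retry with exponential backoff for transient errors, plus a simple circuit breaker that stops calling a failing dependency for a while. The thresholds, delays, and which exceptions count as transient are illustrative assumptions, and the breaker is a single-threaded sketch.

```python
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.1,
          retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient errors with exponential backoff (0.1s, 0.2s, 0.4s, ...)."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * (2 ** attempt))
    raise ValueError("attempts must be >= 1")


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the circuit is open."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures and rejects calls until
    `reset_timeout` seconds pass; then one trial call decides whether it closes again.
    Not thread-safe: a single-threaded sketch."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            self.opened_at = None  # half-open: allow one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # a success closes the circuit
        return result
```

Putting `retry` outside the breaker, as in `retry(lambda: breaker.call(fetch_prices))` for some hypothetical `fetch_prices` call, means an open circuit fails fast instead of being retried, because `CircuitOpenError` is not in `retry_on`. This is the kind of code each service carries its own copy of under the embedded approach.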

But once you move to a microservices architecture, with many small services that are sometimes written by different teams using different technologies, you start getting fragmentation: each service uses different libraries, different versions, and different policies. Any change in how you handle one of these concerns requires a roll-out plan across every service. You start trying to make them consistent, pouring more effort into governance, reviews, and inter-team communication and decisions. And eventually you start asking why you are repeating this code in every individual service, and why these infrastructure needs aren’t coming from the underlying platform.

This brings us to the next approach.

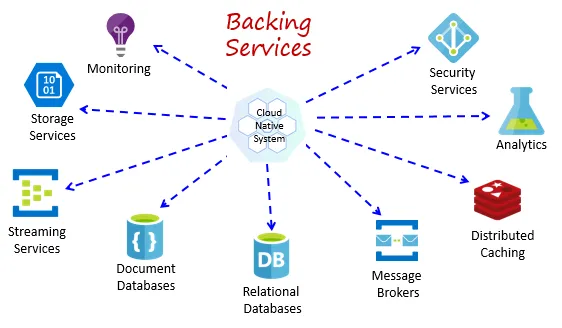

Cloud-Native

Offload these infrastructure concerns outside your application, using the platform-as-a-service (PaaS) features of your cloud vendor of choice. Build each microservice as a minimal API that contains only the business logic it’s supposed to implement, then add everything else from outside, taking advantage of the cloud’s capabilities.

For example, if you’re using Microsoft Azure, here is what you might use:

- Azure Active Directory for authentication

- API Management to authorize and route request traffic, and to provide a developer portal

- Azure App Service to host and auto-scale your app

- App Insights and Azure Monitor to monitor and troubleshoot your application

- Azure Blob Storage for file IO

- SQL Azure or Cosmos DB for database

- Azure Cache for Redis for in-memory caching

- Azure Key Vault to store secrets and sensitive configuration

- Azure WebJobs or Azure Functions for background processes

- Azure Service Bus for queuing and Pub/Sub

- Rely on Azure for Network Security/Firewalls/Failover

Notice that I didn’t mention anything .NET-specific, so the above works for microservices built in any language and frees you from managing many infrastructure elements yourself. It also ensures that all microservices behave consistently: there is one place to manage authentication, one place to see logs, and one place to put data. If any of these aspects needs to change, the change applies to all services by default, because they all rely on the same underlying platform. This approach also opens up serverless capabilities, which means you can host rarely used features of your app and pay only for what you use.
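Because none of this is language-specific, the service itself can shrink to its business logic. As a rough illustration (a Python sketch using only the standard library’s WSGI support, not anything Azure-specific), this service has one hypothetical business endpoint and a `/health` endpoint that a platform probe, such as App Service’s health check, could call. It contains no logging, authentication, or caching code. The routes and the `PORT` environment variable are assumptions.

```python
import json
import os
from urllib.parse import parse_qs
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """A WSGI app containing only business logic. Authentication, edge routing,
    TLS, scaling, and log collection are assumed to be handled by the platform."""
    path = environ.get("PATH_INFO", "/")
    if path == "/health":
        # Endpoint for a platform health probe; it only reports that the process is up.
        status, body = "200 OK", {"status": "healthy"}
    elif path == "/orders/total":
        # Hypothetical business endpoint: /orders/total?qty=3&unit_price=2.5
        query = parse_qs(environ.get("QUERY_STRING", ""))
        try:
            total = int(query["qty"][0]) * float(query["unit_price"][0])
            status, body = "200 OK", {"total": total}
        except (KeyError, ValueError):
            status, body = "400 Bad Request", {"error": "qty and unit_price are required"}
    else:
        status, body = "404 Not Found", {"error": "not found"}
    payload = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


def serve() -> None:
    # Assumption: the hosting platform passes the listening port in PORT.
    port = int(os.environ.get("PORT", "8000"))
    with make_server("", port, app) as server:
        server.serve_forever()
```

Calling `serve()` runs it locally with `wsgiref`, which is fine for a demo but not meant for production; everything else on the list above comes from the platform rather than from this code.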

At the same time, it locks you in to hosting your app on Azure. You can no longer run your app on your laptop for demos or offline development, you can’t host it on-premise, and you can’t move it to another cloud vendor if you need to (unless, of course, you are ready to rewrite your app to support that).

Still, this is a valid and popular approach, with more pros than cons in many situations. But for situations where you still need the agility to host anywhere without vendor lock-in, there is a third approach.

Cloud-Agnostic

This means doing the same thing as Cloud-Native, but using only open-source or other technologies that can run anywhere, and relying on standards rather than vendor-provided features.

If you take this route, you will probably do the following:

- OpenID Connect / OAuth 2.0 for authentication and authorization

- Host and scale your app as containers in Kubernetes

- Log to standard output (stdout) and collect the logs using the ELK stack (Elasticsearch, Logstash, Kibana)

- Monitor and manage traffic using a service mesh like Istio

- NGINX as a reverse proxy for routing

- Redis container for in-memory caching

- Kubernetes ConfigMaps and Secrets for configuration

- Background jobs that run in containers on demand

- You also get to try Dapr, a promising open-source project that gives your app a variety of platform-as-a-service features, such as reliable service-to-service invocation, pub/sub, and state storage, while still running anywhere inside Kubernetes
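Two of the conventions above are easy to show in code. The following Python sketch (standard library only) logs to stdout as one JSON object per line, so whatever collector runs in the cluster (for example, the ELK stack) can ingest it, and reads configuration from environment variables or from files in a mounted Secret volume, both of which Kubernetes supports. The JSON field names, the `/etc/app-secrets` mount path, and the env-then-file lookup order are assumptions.

```python
import json
import logging
import os
import sys
from pathlib import Path
from typing import Optional


class JsonFormatter(logging.Formatter):
    """Formats each record as one JSON object per line, easy for a log shipper to parse."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def configure_logging(level: str = "INFO") -> logging.Logger:
    """Send all logs to stdout; the cluster's log collector takes it from there."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]  # replace any file or default handlers
    root.setLevel(level)
    return root


def get_setting(name: str, secrets_dir: str = "/etc/app-secrets",
                default: Optional[str] = None) -> Optional[str]:
    """Look up a setting in an environment variable first (e.g. populated from a
    ConfigMap), then in a file of the same name in a mounted Secret volume."""
    value = os.environ.get(name)
    if value is not None:
        return value
    path = Path(secrets_dir) / name
    if path.is_file():
        return path.read_text(encoding="utf-8").strip()
    return default
```

Nothing here refers to a specific vendor: the same code runs on a laptop, on-premise, or in any managed Kubernetes service, with the platform deciding where stdout goes and what gets mounted.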

This approach gives you the same flexibility to have microservices written in many languages by many teams. You get minimal microservices that do their jobs well, along with central management, monitoring, and consistent behaviour across varied services. You still have access to the virtually unlimited infrastructure resources of the cloud, but you are not locked in to a specific vendor. And you can still run your app on your laptop (although you will need a bigger one).
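Part of what makes this work across languages is that Dapr runs as a sidecar exposing plain HTTP (and gRPC) APIs, so a service can use its building blocks without any Dapr-specific library. The stdlib-only Python sketch below builds requests for three Dapr HTTP endpoints: publishing an event, saving state, and invoking another service. The component names (`orderpubsub`, `statestore`) and the app ID (`inventory`) are hypothetical, and `send()` only succeeds when a Dapr sidecar is running next to the app.

```python
import json
import os
import urllib.request
from typing import Any


def dapr_base_url() -> str:
    # Dapr sets DAPR_HTTP_PORT for the app; 3500 is its default HTTP port.
    return f"http://localhost:{os.environ.get('DAPR_HTTP_PORT', '3500')}/v1.0"


def publish_request(pubsub: str, topic: str, event: dict) -> urllib.request.Request:
    """POST /v1.0/publish/{pubsub}/{topic}: publish an event to a pub/sub component."""
    return urllib.request.Request(
        f"{dapr_base_url()}/publish/{pubsub}/{topic}",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def save_state_request(store: str, key: str, value: Any) -> urllib.request.Request:
    """POST /v1.0/state/{store}: save a list of key/value pairs to a state store."""
    return urllib.request.Request(
        f"{dapr_base_url()}/state/{store}",
        data=json.dumps([{"key": key, "value": value}]).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_request(app_id: str, method: str) -> urllib.request.Request:
    """GET /v1.0/invoke/{app_id}/method/{method}: call another service through Dapr."""
    return urllib.request.Request(
        f"{dapr_base_url()}/invoke/{app_id}/method/{method}", method="GET"
    )


def send(req: urllib.request.Request, timeout: float = 5.0) -> bytes:
    # Requires a running Dapr sidecar; raises URLError otherwise.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()
```

Which broker or database actually sits behind `orderpubsub` or `statestore` is decided by Dapr component configuration, not by the service code, which is what keeps the service portable.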

The downside of this approach is a steeper learning curve: you have to find and integrate what you need from different sources, and you always have to keep an eye on what you choose to use. You also can’t use a shiny new service that is exclusive to one vendor until the open-source community or the other vendors replicate it. Think, for example, of how and when you can use serverless computing inside Kubernetes.

Summary

To summarize, there are three approaches:

- Embed all the infrastructure elements you need inside your big, fat monolith, which makes it easy to deploy anywhere without dependencies but does not work well for microservices.

- Keep common code out of your microservices and rely on your cloud vendor’s PaaS offerings, at the cost of being locked in to that vendor.

- Keep common code out of your microservices and rely on open source and standards, which is a bit harder but makes you cloud-agnostic and more resilient.

I hope this way of thinking helps you make the hard choices at the start of your next application. In the end, you don’t have to pick only one option; you can mix and match depending on the situation.